Randomization: The (Almost) Magical Solution to Too Many Surveys

Randomizing the timing of surveys makes them way more useful and less burdensome.

What’s the best way to reduce the burden of collecting data? Don’t ask users in the first place. This is exactly what random sampling does: instead of collecting data from a full population, you collect data from a random subset. The magic of randomization is that you can get answers to your questions while collecting data on a fraction of all your users.

In the age of cheap data storage, there’s a temptation to collect everything, not just small samples. What if you need it later? Collecting everything is great for analysts who fetishize huge datasets. And for data that is collected passively, such as website logs, there’s no issue. But when users have to input data into a form or complete a survey, there’s always a burden. Whenever there’s a burden on a user, it’s always worth asking if you need these data on EVERY user.

The answer may be “YES” for a lot of information. If you’re running an e-commerce business, you need to have every user’s address to ship them products. However, that same business might not need to survey all its customers about a new feature. A random subset of users, large enough to yield a few hundred responses, is enough to accomplish the task. The users who weren’t sampled will just have one less annoying email in their inbox.

Random sampling does more than reduce clutter: it can dramatically increase the usefulness of data collection by fighting back against survey fatigue. If you don’t ask, you don’t have to worry about exhausting your users’ goodwill. The e-commerce business that asks one subset of its customers for feedback about Feature A can ask a different subset about Feature B without worrying about survey fatigue.

This goes for internal data collection as well. One of the worst offenders is the dreaded employee survey. I’ll deep dive into numerous issues with these surveys in future posts, but right now I’ll focus on their infrequency. Most organizations do these once (maybe twice?) a year. I assume HR departments don’t do it more often to avoid revolts. These surveys are long, awkward, and unpleasant. Response rates would almost certainly plummet if they were fielded more frequently.

Infrequency is a problem. For a growing organization, a year (or even six months) is a long time. There’s typically a lot of turnover in scaling organizations. There are also new employees being brought on all the time. Any survey data collected is out of date very quickly. Moreover, it’s a costly thing to do; something that takes 30-45 minutes of every employee’s time is a considerable time sink.

For now, let’s assume that the data could be valuable but only for a short time. How do you collect it more often? You could incentivize employees to take these surveys. That’s expensive. You could annoy them or shame them. That’s morale-depleting and counterproductive to an important goal of these surveys.

The answer is randomization.

Rather than doing a single point-in-time survey of the entire organization, do more frequent surveys of random subsets. It’s roughly the same amount of effort and it provides a better picture of what’s going on.
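To make the idea concrete, here is a minimal Python sketch of one way to split a workforce into random survey waves. The email addresses, the four-wave schedule, the seed, and the `assign_waves` helper are all assumptions made up for illustration, not part of any real HR system.

```python
import random

def assign_waves(employee_ids, n_waves, seed):
    """Randomly split employees into n_waves groups of near-equal size."""
    rng = random.Random(seed)      # fixed seed keeps the groupings reproducible
    shuffled = list(employee_ids)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    # Deal the shuffled list out round-robin; wave sizes differ by at most one
    return [shuffled[i::n_waves] for i in range(n_waves)]

# HYPOTHETICAL roster: 1,600 fake addresses, four waves (e.g. one per quarter)
employees = [f"emp{i:04d}@example.com" for i in range(1, 1601)]
waves = assign_waves(employees, n_waves=4, seed=2024)

for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {len(wave)} employees, first is {wave[0]}")
```

Each wave is a random quarter of the organization, and nobody is surveyed twice within a cycle. Fixing the seed means the same groupings come back every time the script runs.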

Rather than just going through the math, I thought it’d be more helpful to illustrate with a 2018 employee survey from the City of Tempe, a suburb of Phoenix with approximately 1,600 employees. It’s a long survey - over 50 questions - that they have conducted approximately every other year. Below is one (of several) pages:

I took a very standard question from this survey: “My immediate supervisor treats me with respect.” This question is answered on a 1-5 scale - a very common format.

Below is the distribution of responses. It’s very uneven, which is typical for these types of surveys and questions. After all, if you felt very disrespected by your boss, you’d probably look for another job.

The average across the entire department is 4.2 on a 5-point scale. Here’s the full distribution:

The right counterfactual to investigate is what would have happened if they had only asked a fraction of the department to complete the survey. You’re unlikely to get the exact distribution of responses back, but you could get close enough that it wouldn’t make a difference for decision-making or interpreting the data.

We can actually do this by pretending we only had a fraction of the data in our possession. Essentially, we can mimic what would happen if magically a random selection of data disappeared. Then we can investigate the data and see if the conclusions are different than what we would make with the full data set.

Simulation works great with survey data because there are uneven (also known as skewed) distributions of responses with upper and lower bounds. In our example, the responses have a mean of 4.2 (on a 5-point scale) and a standard deviation of about 1. We know that the highest possible average you can get on a 5-point scale is 5, so it doesn’t make a ton of sense to use statistical methods that can produce estimates above this. That would be less of a problem if the mean weren’t near the upper (or lower) boundary, but in this case, 4.2 is pretty close to 5. A simple simulation accommodates these constraints without having to do any complex math.

We can do the simulation in a few simple steps:

Step 1) Randomly select 25%¹ of the responses (234 out of 937)

Step 2) Calculate the mean response for this subsample

Step 3) Repeat Step 1 and Step 2 10,000 times

Step 4) Plot the distribution of the sub-sample averages and compare them to the population average
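The four steps above can be sketched in a few lines of Python. I can’t reproduce the Tempe responses here, so the `counts` below are a hypothetical distribution that I made up to have 937 responses, a mean near 4.2, and a standard deviation near 1. Treat the output as an illustration of the method, not a replication of the numbers in this post. Step 4 is summarized as text instead of a plot to keep the example dependency-free.

```python
import random
from statistics import fmean

rng = random.Random(42)  # fixed seed so the run is reproducible

# HYPOTHETICAL counts for each 1-5 answer (937 total). Not the real Tempe data.
counts = {1: 40, 2: 50, 3: 90, 4: 250, 5: 507}
responses = [score for score, n in counts.items() for _ in range(n)]
population_mean = fmean(responses)

SAMPLE_SIZE = 234        # Step 1: 25% of the 937 responses
N_SIMULATIONS = 10_000   # Step 3: number of repetitions

# Steps 1-3: draw a subsample WITHOUT replacement, average it, repeat
sample_means = [
    fmean(rng.sample(responses, SAMPLE_SIZE))
    for _ in range(N_SIMULATIONS)
]

# Step 4 (text version): how often does a subsample land near the full average?
share_close = sum(
    abs(m - population_mean) <= 0.1 for m in sample_means
) / N_SIMULATIONS

print(f"Full-data mean: {population_mean:.2f}")
print(f"Lowest / highest simulated mean: {min(sample_means):.2f} / {max(sample_means):.2f}")
print(f"Share of simulated means within 0.1 of the full mean: {share_close:.1%}")
```

To get the histogram instead, pass `sample_means` to whatever plotting tool you prefer and draw a vertical line at `population_mean`.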

What we see below is that a 25% sample here does a great job. The average of the full sample is the white dotted line, not coincidentally in the center of the distribution. In fact, about 90% of the simulated samples fall between 4.1 and 4.3. If a manager wanted to get a sense of how the City of Tempe was doing on this score, they’d get nearly identical information by only asking 25% of the employees.

Summary statistics are helpful, but most people want to know about breakdowns across at least two dimensions. For example, let’s say you wanted to know whether there was a gender disparity on this respect question. This might be important for prioritizing gender debiasing training programs and diagnosing retention issues, among other things.

Below I’ll do the same simulation exercise with a gender breakdown.² There are more male employees than female employees in these data, so it’s helpful to scale the number of respondents in each group. Therefore, the Y-axis is in percent on the graph below rather than just a count of responses.

We see only small differences in how male and female employees are distributed across the response options. The averages for each group are pretty similar: 4.34 for males and 4.20 for females. Let’s see how a 25% random sample affects this.

Most of the simulations are very close to the actual average for each subgroup (the blue dotted line for females and the pink dotted line for males). There is a larger range of values in the breakdowns because the number of people in each group is about half of the full sample. The smaller the subgroups, the more likely you are to get funky draws of employees that feel more respected (or less) than the full sample.

Now let’s compare the distributions to each other. The male and female simulations overlap quite a bit. It would be possible - just by chance - to make this small gap in respect disappear or appear larger than it actually is in the full population.

But the real question is does any of this matter to managers making decisions with these data? In this case, my answer is no.

Let’s take a step away from the comparisons. I think most people would agree that if most employees feel respected by their supervisors, that’s probably a good thing. The vast majority of simulated sampling would lead you to this conclusion for both genders.

Turning to the potential gender gap, a difference of roughly 0.1 (4.34 versus 4.20) on a 5-point scale is very small in absolute terms. I’d be much more concerned if it were closer to half a point. As the graph below shows, the gap between genders exceeds 0.5 in less than 1% of simulated samples. It’s actually more common for sampling to give females a higher score than males than to produce a large gender gap.
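Here is a rough Python sketch of the gender-gap check. The group sizes and response counts are hypothetical: I picked them only so that the group means land near 4.34 and 4.20 and so that there are more males than females. The `gender_gap` helper is my own name for illustration.

```python
import random
from statistics import fmean

rng = random.Random(7)  # fixed seed so the run is reproducible

# HYPOTHETICAL counts per 1-5 answer, chosen to give means near 4.34 and 4.20.
# These are NOT the real Tempe counts.
male_counts = {1: 16, 2: 20, 3: 45, 4: 130, 5: 309}
female_counts = {1: 18, 2: 24, 3: 42, 4: 103, 5: 227}

people = [("M", score) for score, n in male_counts.items() for _ in range(n)]
people += [("F", score) for score, n in female_counts.items() for _ in range(n)]

def gender_gap(rows):
    """Mean male score minus mean female score for a list of (gender, score)."""
    male = [score for gender, score in rows if gender == "M"]
    female = [score for gender, score in rows if gender == "F"]
    return fmean(male) - fmean(female)

true_gap = gender_gap(people)
sample_size = len(people) // 4  # a 25% sample of everyone, not stratified

# Draw a 25% sample without replacement, compute the gap, repeat 10,000 times
gaps = [gender_gap(rng.sample(people, sample_size)) for _ in range(10_000)]

share_large_gap = sum(abs(g) > 0.5 for g in gaps) / len(gaps)
share_female_higher = sum(g < 0 for g in gaps) / len(gaps)

print(f"Gap in the full data: {true_gap:.2f}")
print(f"Simulations with a gap larger than 0.5: {share_large_gap:.1%}")
print(f"Simulations where females score higher: {share_female_higher:.1%}")
```

The sample is drawn from everyone at once, so the number of males and females varies from draw to draw, just as it would in a real random survey.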

Overall, my conclusion here is that a 25% sample would work really well for this employee survey for top-level numbers and simple breakdowns.

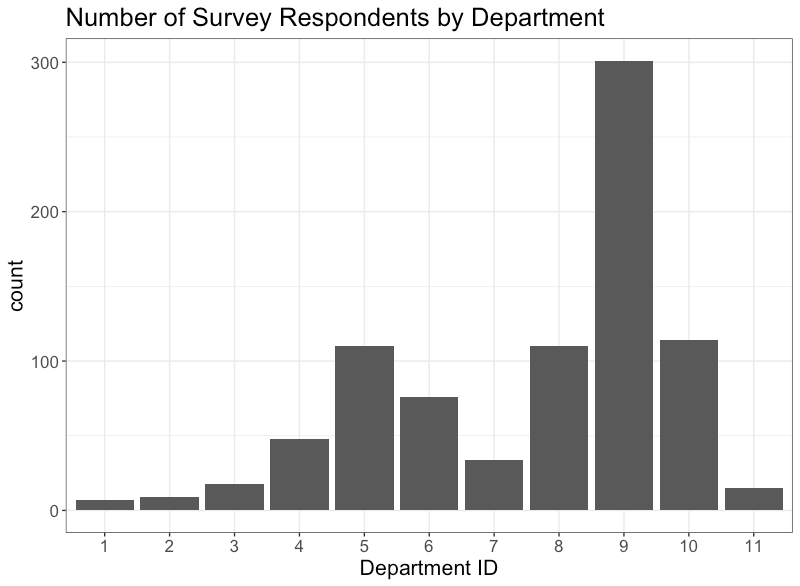

That said, more complex cuts of the data may be tougher. For example, managers might be interested in the performance of different departments. That distribution is much more uneven and there’s a bunch of very small departments.

I’d still advocate for random sampling in this case. Yes, you can’t do a breakdown for every department each time you field the survey. But that’s ok - you can still have topline measures for the organization and some more balanced demographics, like tenure and gender. When you need to analyze these smaller groups you can combine data from multiple waves of surveys.

Having data pooled across multiple time periods can give you higher quality data than a point in time. For example, if you wanted to get a sense of supervisor respect across the entire year, I’d rather have data that include surveys fielded across multiple months rather than concentrated in a single one.

Randomization is your friend here as well: it helps to cancel out potential seasonality effects. I’d bet that companies that do their employee surveys around bonus time get more positive scores. Conversely, if the surveys align with performance review periods, scores might be lower. Randomization helps to lessen these effects.

I wish I could provide hard and fast rules for how to figure out what’s the best proportion of an audience to randomize. Sadly, I can’t. It’s really situationally dependent and is influenced by the distribution of responses.

That said, I can suggest an approach to figure it out. If you have historical data, simulating randomization, just as I did with the City of Tempe survey, is a great place to test things. Past data isn’t always representative of future data, but it’s often quite close. It will at least provide you guardrails against egregious mistakes. If you don’t have historical data, you can start conservatively and always split further. If you’re using a full population sample now, why not try a 50% sample and see how that goes? If it goes well, perhaps you can split that in half again?
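One way to run that test on historical data is to repeat the simulation at several sampling fractions and see how much the results wobble. The sketch below assumes a hypothetical `historical` list of 1-5 responses (made-up counts, not real survey data), and `spread_of_means` is a helper name I invented for this example.

```python
import random
from statistics import fmean

def spread_of_means(responses, fraction, n_sims=2_000, seed=0):
    """Approximate width of the middle 90% of simulated sample means."""
    rng = random.Random(seed)
    k = max(1, round(len(responses) * fraction))  # subsample size
    means = sorted(fmean(rng.sample(responses, k)) for _ in range(n_sims))
    low = means[int(0.05 * n_sims)]        # roughly the 5th percentile
    high = means[int(0.95 * n_sims) - 1]   # roughly the 95th percentile
    return high - low

# HYPOTHETICAL historical responses on a 1-5 scale (937 in total)
counts = {1: 40, 2: 50, 3: 90, 4: 250, 5: 507}
historical = [score for score, n in counts.items() for _ in range(n)]

for fraction in (0.10, 0.25, 0.50):
    width = spread_of_means(historical, fraction)
    print(f"{fraction:.0%} sample: middle 90% of simulated means spans {width:.2f} points")
```

If the spread at a given fraction is smaller than any difference you would act on, that fraction is probably safe to try.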

As for tools, knowing a little bit of basic programming goes a very long way here. But even if you’re allergic to coding, have no fear. You can actually do it in Excel or Google Sheets - it just takes some copying and pasting.³

I even set up a template here for it with some fake employee email addresses:

https://docs.google.com/spreadsheets/d/1CnCnQ0oh84GzBbUppLo2VaO7UWscClR-Sk8kmN7s1-M/edit?

All you have to do is create random subsets with some basic filtering.

Have thoughts? Leave a comment below.

¹ For the stats nerds, I sampled without replacement for the purposes of the counterfactual exercise. If I wanted to get bootstrapped confidence intervals, I would have used replacement.
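As a rough illustration of that distinction, the sketch below takes one 25% sample (without replacement) and then bootstraps it (with replacement) to get a percentile confidence interval for the mean. The response counts are hypothetical, and the percentile method is just one common way to build a bootstrap interval.

```python
import random
from statistics import fmean

rng = random.Random(11)  # fixed seed so the run is reproducible

# HYPOTHETICAL full set of 937 responses on a 1-5 scale
counts = {1: 40, 2: 50, 3: 90, 4: 250, 5: 507}
population = [score for score, n in counts.items() for _ in range(n)]

# The survey you actually fielded: one 25% sample, drawn WITHOUT replacement
observed = rng.sample(population, 234)

# Bootstrap: resample the observed answers WITH replacement, same size each time
boot_means = sorted(
    fmean(rng.choices(observed, k=len(observed)))
    for _ in range(5_000)
)

# Approximate 95% percentile interval from the sorted bootstrap means
lower = boot_means[int(0.025 * len(boot_means))]
upper = boot_means[int(0.975 * len(boot_means)) - 1]

print(f"Observed sample mean: {fmean(observed):.2f}")
print(f"Approximate 95% bootstrap interval: {lower:.2f} to {upper:.2f}")
```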

² There is a gender non-conforming category in the data, but it’s 3 respondents so I omitted it from this analysis. You don’t need statistics to understand the experience of 3 people - just look at the data.

³ Google Sheets and Excel (to my knowledge) don’t allow you to configure “seeds” to keep the randomly assigned groupings constant.